AI adoption breaking significantly across demographic groups

Plus, Trustible launches partner program, how to effectively and safely use AI, our global policy and industry round up, and what GPT-5 can tell us about AI evaluations

Hi there, Happy Wednesday, and welcome to the latest edition of the Trustible Newsletter! We have a lot to cover this week; let’s dive right in.

In today’s edition (5-6 minute read):

AI adoption uneven across demographic groups

Trustible launches global partner program

What you need to know to effectively and safely use AI

Global & U.S. policy roundup

What GPT-5 can tell us about AI evaluations

Webinar: Building Guardrails for Enterprise AI - Exploring the Databricks AI Governance Framework and Beyond

1. AI adoption uneven across demographic groups

Recent studies from HBS and Pew show that AI adoption is uneven across demographics in ways that challenge expectations. HBS’s meta-analysis of 18 studies finds women are significantly less likely than men to use generative AI tools, with heightened skepticism over bias and risk being key factors. Pew reports that younger and more educated adults are adopting AI far faster than older adults or those with less educational attainment, with a gap in usage of roughly 30 points between those with a post-graduate degree (48%) and those with only a high school education (17%).

Meanwhile, an Elon University survey found some racial and ethnic gaps in LLM usage, with Black and Hispanic adults saying they use AI more often (57% and 66% respectively) than the general population (52%) of adults.

While there are a variety of potential reasons behind these disparities, ranging from risk sensitivity and perceptions of bias to the availability of education resources, the potential consequences of sustained disparities are clear: negative feedback loops. When groups hold back from using AI, whether from distrust or lack of access, their perspectives become underrepresented in the very systems that are learning from user data and being fine-tuned on it. That dynamic could make future tools feel even less relevant to them and further undermine trust.

Key takeaway: While the tech industry is often quick to attack any form of regulation as a potential burden on or obstacle to AI growth, regulation may also be one of the key elements that unlocks adoption among the groups most skeptical of AI. This unlock is necessary to maximize the benefits of AI.

2. Trustible Launches Global Partner Program

Today, we’re announcing the global launch of the Trustible Partner Program, an ecosystem purpose-built to weave AI governance through every stage of the AI lifecycle. Our program brings together technology alliances, system integrators, resellers and distributors, auditors, and insurers around a single objective: help organizations adopt AI responsibly, prove compliance, and scale value with governance at the core.

Accelerating responsible AI adoption isn’t an isolated activity by a single organization or vendor. It takes a coalition of partners to take trusted AI from words on a page to operational practice. The Trustible Partner Program isn’t a passive commitment: we’re creating a working coalition of builders committed to getting AI governance done for organizations, starting today. By aligning leaders across the AI value chain, we’re delivering a connected AI governance experience that accelerates the time-to-value of AI and truly delivers trusted AI.

In the coming weeks, we’ll share our initial launch partners, with more to follow throughout the year. We’re opening applications today for Technology Alliances, Resellers/Distributors, System Integrators, and Strategic Alliances. If you’re building, advising, or delivering AI, and you believe governance should be built‑in, not bolted‑on, join us.

You can read more about the program on our blog.

3. What You Need to Know to Effectively and Safely Use AI

The Trump Administration's AI Action Plan proposes several policy recommendations around AI education. In this context, it’s not about the use of AI within the current education system, but rather about educating our entire current and future workforce on how to use AI. Massive investments in data center infrastructure, growing model size and complexity, and agentic integrations into existing applications won’t yield returns if there are bottlenecks in human expertise for deploying, using, and governing these systems. While governments will likely take point in enacting AI education within schools, it’s worth considering what an AI literacy and education program should look like inside an organization, and how that organization can retrain its existing workforce to be expert ‘AI users’.

Basic Literacy - This should teach users the basic vocabulary of AI and the basic tenets of how these systems work. The exact technical details of AI models themselves don’t need to be covered in depth; rather, this should focus on more basic concepts like what a prompt is, how to provide appropriate context in a prompt, and what different AI tools may be broadly capable of.

Prompting Skills - Describing the exact output you want from an AI system isn’t always easy. Much like with computer programming, it can be challenging to figure out the exact steps you want an AI system to take, or to describe your desired outcome with enough clarity for the AI system to understand. AI models don’t always have a full understanding of the world, so some relevant information needs to be provided directly. There are a lot of prompting techniques that can help.

Risk & Governance Education - Much like how every technology-enabled employee has to complete annual cybersecurity training, there should be an equivalent for using AI tools. There are some distinct AI risks, ranging from hallucinations to system biases. Ensuring that users simply know these things are possible can itself be a challenge. Many organizations want ‘humans in the loop’ of AI systems as their main risk mitigation, but that still requires training on what to look for.

Agentic Awareness - As various forms of Agentic AI get developed and deployed, it will become essential to teach staff what kinds of tasks are ready to be automated, and which ones still need to be done by humans because of AI system limitations, governance concerns, or even the business strategy and brand reputation of your organization. There will be a lot of hype around AI agents for years to come, and knowing what can be automated efficiently will be half the battle.
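To make the prompting point above more concrete, here is a minimal sketch of one way a training program might teach staff to structure a prompt so that role, context, task, and output format are all stated explicitly. The function name `build_prompt`, the section labels, and the sample text are illustrative assumptions, not a standard or a vendor API.

```python
def build_prompt(role, context, task, output_format):
    """Assemble a prompt from labeled sections so nothing is left implicit.

    Hypothetical helper for illustration; empty sections are skipped.
    """
    sections = [
        ("Role", role),
        ("Context", context),
        ("Task", task),
        ("Output format", output_format),
    ]
    return "\n\n".join(
        f"{label}:\n{text.strip()}" for label, text in sections if text.strip()
    )


# Made-up example: the context line supplies a fact the model cannot know.
prompt = build_prompt(
    role="You are an assistant helping a compliance analyst.",
    context="Company policy requires vendor contracts to be reviewed every 12 months.",
    task="List the contracts below that are overdue for review.",
    output_format="A bulleted list of contract names, one per line.",
)
print(prompt)
```

The design choice here is simply that each section forces the author to write down information the model would otherwise have to guess.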

Key Takeaway: LLMs can be very powerful and extremely accessible because their inputs can be natural language, but that can be a double-edged sword. Most organizations won’t be able to hire easily for these AI skills, and will likely need to develop their own education and training programs.

4. Global & U.S. Policy Roundup

Here is our quick synopsis of the major AI policy developments:

U.S. Federal Government. The Trump Administration is touting a deal cut with AI chip manufacturers Nvidia and AMD, which stipulates that the U.S. government would receive 15% of revenue from their chip sales to China. Congressional Democrats have asked the Administration to reconsider the deal due to national security concerns. The General Services Administration also announced a new program aimed at encouraging AI adoption across the federal government. The move is part of a push by the Trump Administration to modernize and automate more aspects of the federal government.

U.S. States. AI-related policy developments at the state level include:

California. OpenAI asked Governor Gavin Newsom to “consider frontier model developers compliant with its state requirements when they sign onto a parallel regulatory framework like the [EU Code of Practice] or enter into a safety-oriented agreement with a relevant US federal government agency.”

Colorado. The Colorado state legislature will return for a special session on August 21, 2025, during which it is expected to address potential changes to its AI law (SB 205). Proposed bills have been released to amend the current law, all of which would narrow its current scope. It is unclear whether a deal will be struck, as the regular legislative session adjourned without an agreement.

Florida. The Florida Bar is looking into new rules that address AI risks in the legal profession. The Bar has taken some action on how AI can be used in the practice of law, but recent advancements with AI technology have caused Bar leaders to consider additional guidance.

Texas. Texas Attorney General Ken Paxton launched an investigation into Meta and Character.ai over controversies with their chatbots. Attorney General Paxton emphasized that the companies may have engaged in “deceptive trade practices and misleadingly market[ed] themselves as mental health tools.” The Texas investigation comes shortly after Congressional Republicans announced their own investigation into Meta’s chatbots.

Africa. Google announced that it is committing $37 million to AI development across countries in Africa. The new investment is aimed at AI research and supporting local AI projects. The announcement comes as Ghana and Lesotho launch a new digital partnership to facilitate cooperation on setting digital standards and frameworks, as well as leveraging AI for agricultural support.

Asia. AI-related policy developments in Asia include:

China. Sam Altman expressed concerns over where the U.S. stands against China on AI. During a recent interview, Altman noted that he is “worried about China” and that the AI arms race between the two countries is more complex than meets the eye. Specifically, he highlighted China’s capacity to build and flaws in current U.S. policy (i.e., chip export controls).

Malaysia. The Association of Southeast Asian Nations (ASEAN) convened the ASEAN Malaysia AI Summit in Kuala Lumpur. Secretary-General of ASEAN, Dr. Kao Kim Hourn, announced during the summit that he expects the ASEAN AI safety framework will be established “by early 2026.” The Malaysian government also launched their National Cloud Computing Policy at the summit. Simultaneously, Huawei hosted the Huawei Cloud AI Ecosystem Summit APAC 2025 at which they announced their intent to train 30,000 AI professionals in Malaysia over the next three years. Huawei has been a source of controversy in the U.S. because of its close ties to the Chinese government.

Japan. NTT Data, a multinational Japanese information technology company, announced a strategic partnership with Google to “accelerate enterprise adoption of agentic AI and cloud modernisation.” This is the first time a Japanese company has signed a contract of this nature with Google.

Middle East. AI-related policy developments in the Middle East include:

Saudi Arabia. The Saudi government is doubling down on AI skills development with a recent partnership announced between the Saudi Data and Artificial Intelligence Authority and Oxford University. The new venture creates an “intensive artificial intelligence application engineering camp aimed at training both Saudi and international graduates in advanced AI technologies.” The Saudi Ministry of Education also launched new AI-related curricula for students and training programs for educators.

UAE. Representatives from the UAE Council for Fatwa previewed plans to utilize AI for issuing fatwa (legal opinions on points of Islamic law). The discussion came amidst a conference held in Cairo on the intersection between religious scholarship and AI.

North America. AI-related policy developments in North America outside of the U.S. include:

Canada. Quebec's government healthcare corporation, Santé Québec, is rolling out a pilot program that uses AI to help doctors transcribe medical notes from patient visits. AI transcription tools remain popular; however, they continue to raise concerns about confidentiality and hallucinations.

Mexico. BBVA Mexico is moving to eliminate touch-tone options in its phone service and replace them with a generative AI system called Blue. The system is meant to help reduce the time it takes for customers to find the assistance they need.

5. What GPT-5 can tell us about AI Evaluations

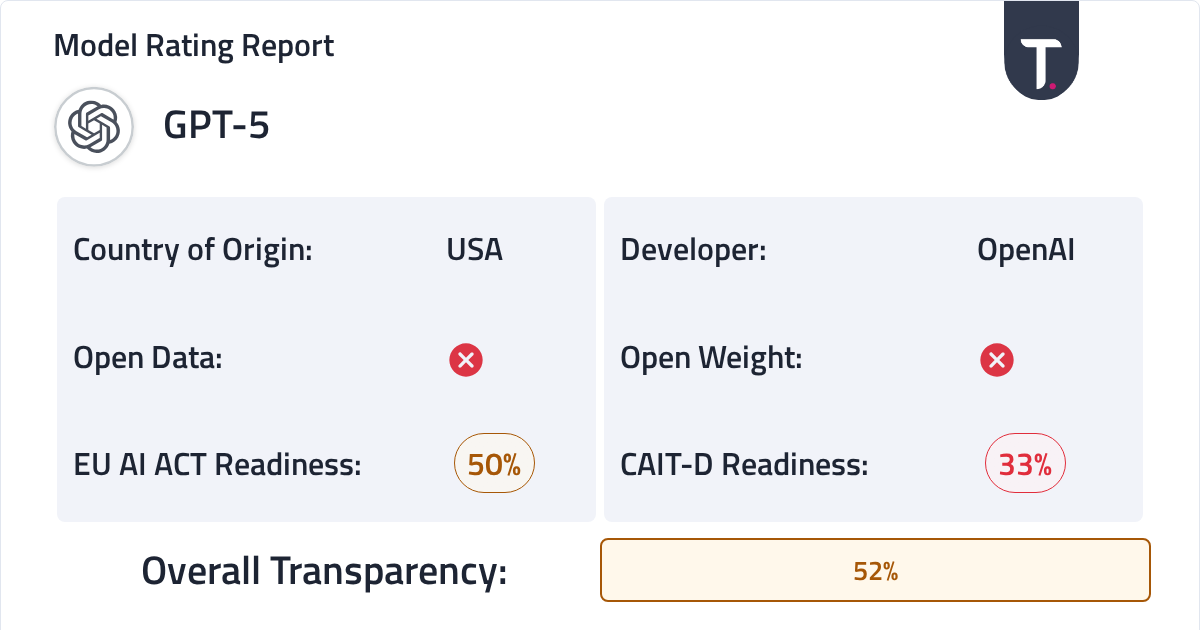

Amidst controversies about misleading graphs and GPT-5’s dramatic change in personality, Trustible took a deeper look at its System Card and other supporting documentation for the Trustible Model Ratings. As with previous ratings, OpenAI scored poorly on the Data section (covering the topic in only a few vague sentences), but received a High Transparency rating on several Evaluation categories. Our analysis points to a few key good and bad practices for model evaluation:

Real-World Data: OpenAI used ChatGPT production data to complement existing benchmarks. Testing on realistic data provides a more accurate insight into model functionality. It also reduces the likelihood of test data leakage, as many off-the-shelf benchmarks inadvertently end up in training datasets. This only holds if the production data used for evaluation was explicitly excluded from the training data, since GPT models are trained on ChatGPT data (excluding enterprise accounts and people who opted out).

Learning from Audiences: The System Card introduces several new types of evaluations, including one for sycophancy (given how an update in May resulted in overly sycophantic behavior) and one for measuring performance specifically in health conversations. These choices reflect adaptations to concerns discovered during real-world use.

LLM-as-a-Judge: Many of the benchmarks are evaluated using LLM-as-a-Judge. This evaluation method uses a second LLM to check if GPT-5 produced a correct output. The method is more scalable than human review and more flexible than an exact text matching strategy that checks if the model output matches a reference output exactly. However, there are several drawbacks with OpenAI’s approach: they only use o3 as the grader, while best practice recommends using a model from a different family or, better yet, “LLM-as-a-Jury”, an ensemble of several models. An additional best practice would be to capture an error range, since LLM judges can also make mistakes.

Underspecified Methodology: Many key details of the actual evaluation process were not included, especially for benchmarks that appear in the press release but not in the system card. Small details about prompt formatting or whether each example was run once or several times (LLMs have a lot of variance, and some evaluations will run each input multiple times to get a better sense of performance) can significantly affect the final score on a benchmark. Once “tool use” is introduced, results get even more complicated to recreate. While OpenAI acknowledges the latter issue and intentionally chooses not to compare its models to those of other developers, it’s still not the most transparent approach. Ultimately, external evaluators are the best source of consistent results because they can use the exact same set-up when testing each model.
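For practitioners running their own evaluations, the practices above (a jury of judges, an error range, and repeated runs) can be sketched in a few lines. This is a minimal illustration under stated assumptions, not OpenAI's or Trustible's method: the judge votes and run scores are made-up placeholders, the function names are hypothetical, and the interval uses a simple normal approximation that is rough for small samples.

```python
import math
import statistics
from collections import Counter


def jury_verdict(votes):
    """Majority vote over per-judge verdicts (True = output judged correct).

    Ties count as incorrect, which is a conservative assumption.
    """
    counts = Counter(votes)
    return counts[True] > counts[False]


def accuracy_with_interval(verdicts, z=1.96):
    """Accuracy plus the half-width of a normal-approximation 95% interval."""
    n = len(verdicts)
    p = sum(verdicts) / n
    half_width = z * math.sqrt(p * (1 - p) / n)
    return p, half_width


def summarize_runs(scores):
    """Mean and sample standard deviation across repeated benchmark runs."""
    return statistics.mean(scores), statistics.stdev(scores)


# Placeholder data: three judges (ideally from different model families)
# each vote on four model outputs.
votes_per_example = [
    [True, True, False],
    [True, True, True],
    [False, False, True],
    [True, False, True],
]
verdicts = [jury_verdict(votes) for votes in votes_per_example]
accuracy, half_width = accuracy_with_interval(verdicts)
print(f"accuracy = {accuracy:.2f} +/- {half_width:.2f}")

# Placeholder scores from running the same benchmark three times.
run_scores = [0.72, 0.75, 0.69]
mean_score, spread = summarize_runs(run_scores)
print(f"mean score = {mean_score:.2f}, std dev = {spread:.2f}")
```

Reporting the interval and the run-to-run spread alongside the headline score makes it easier to tell a real improvement from noise.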

Key Takeaway: Improved performance on multiple benchmarks does not directly translate to a better user experience; many users were unhappy with the GPT-5 update. For practitioners, it is important to evaluate models on data reflective of your unique task. Our review of GPT-5 showed a mix of good and bad practices that can be applied or avoided when doing your own analyses. You can read the full model rating here.

6. Webinar: Building Guardrails for Enterprise AI - Exploring the Databricks AI Governance Framework and Beyond

On September 3rd at 1 p.m. ET, join experts from Databricks, Schellman, and Trustible for an exploration of the Databricks AI Governance Framework, including its principles, architecture, and role in helping enterprises scale AI responsibly. We’ll examine how it aligns with emerging regulations and standards (like ISO 42001, NIST AI RMF, and the EU AI Act), and discuss practical considerations for implementing governance controls and assurance programs across the AI lifecycle. We’ll also cover how to create a feedback loop between governance and compliance teams and internal AI stakeholders that can increase and accelerate safe AI adoption.

This 45-minute session is designed to equip technology, risk, and compliance leaders with insights they can apply to operationalize AI governance in their organizations.

Key Highlights:

An In-Depth Look at the Databricks AI Governance Framework and Databricks AI Governance tools: Explore its components, objectives, and how it addresses the unique risks of enterprise AI adoption, with a focus on how tools like Unity Catalog and MLflow can help with the highly technical aspects of AI governance.

Bridging Frameworks to Practice: How organizations can align the Databricks framework, and other standards such as ISO 42001, with other emerging global standards and regulatory obligations.

Operational and Assurance Considerations: Practical insights into implementing governance controls, testing for compliance, and providing assurance over AI systems.

Real-World Perspectives: Lessons from industry practitioners, auditors, and governance experts on avoiding common pitfalls and building resilient AI governance programs.

You can register to save your seat here.

—

As always, we welcome your feedback on content! Have suggestions? Drop us a line at newsletter@trustible.ai.

AI Responsibly,

- Trustible Team