AI Copyright Conundrum Continues As the Era of AEO & GEO Dawns

Plus judging LLM-as-a-Judge as a methodology and our global policy and industry roundup

Happy Wednesday, and welcome back to another edition of the Trustible AI Governance Newsletter! The Trustible team is shipping up to Boston this week for the 2025 IAPP AI Governance Global North America Conference, teaming up with AI governance leaders from Nuix and Leidos to talk through how to build the perfect AI intake workflow. Or is there even such a thing? If you’re in Boston this week, you’ll have to attend our session on Friday to find out.

In the meantime, in today’s edition (5-6 minute read):

The Great AI Copyright Conundrum Part II

Judging LLM-as-a-Judge

Global & U.S. Policy Roundup

AEO / GEO - The New SEO

1. The Great AI Copyright Conundrum Part II

The summer saw a flurry of developments on the interplay between AI and IP. Two cases, one against Meta and another involving Anthropic, handed big tech significant victories over how their AI systems use protected works. As we move into the fall, there is another avalanche of news on AI and copyright litigation.

We previously discussed a lawsuit brought by a group of authors who sued Anthropic for copyright infringement, alleging that the company trained Claude on their protected works without their permission. Anthropic sought to end the case with a $1.5 billion settlement, which would have paid roughly $3,000 per pirated book. However, Judge William Alsup rejected the deal because of his concerns with how it was struck and with the claims process. Judge Alsup will review the deal again on September 25 to “see if [he] can hold [his] nose and approve it.” Meanwhile, Apple faces a new lawsuit from authors who claim that their protected works were used to develop Apple Intelligence. Perplexity was also recently sued by Encyclopedia Britannica and Merriam-Webster over how it uses their material for its “answer engine.”

The Anthropic settlement sought to provide an off-ramp for the dispute at a lower cost than prolonged litigation. Yet the broader concern (as raised by Judge Alsup) is how the deal came about, given that it was negotiated behind closed doors. Settlements are not inherently bad, but when genuine legal questions need resolution, a quick cash settlement does not quite meet the moment. Moreover, determining the value of using someone’s IP to train an AI system is not a cut-and-dried calculation. Paying every IP owner the same amount (as in the Anthropic settlement) ignores the fact that some works are more valuable than others. A recent New York Times op-ed raises legitimate questions about how to put a price on someone’s IP and how someone could effectively “game the system” if all IP is worth the same price.

Key Takeaway: First and foremost - know where your data comes from, because you do not want to be accused of violating IP law. Second, we need policymakers to intervene and actually fix the issue, because relying on court decisions to effectively make law is not a sustainable long-term solution. If there are growing concerns over the “patchwork of state AI laws” in the US, there should be even greater concern about the patchwork of legal decisions and outcomes, especially when the US Supreme Court is less inclined to settle these types of questions.

2. Judging LLM-as-a-Judge

Over the last two years, the LLM-as-a-Judge (LLJ) methodology has become a go-to technique in AI development - but a recent paper titled Neither Valid nor Reliable? Investigating the Use of LLMs as Judges by Chehbouni et al. highlights critical weaknesses of this approach. LLJ refers to the practice of using existing LLMs to judge or label outputs in support of developing new AI systems. Common uses include:

Performance Evaluation: Replacing human annotators in reviewing the outputs of AI systems. For example, LLJs can be used to judge the fluency and professionalism of model outputs or to evaluate safety alignment (many of the tests in GPT-5’s system card rely on this technique).

Model Enhancement: LLJs can assist with training new models through processes like reward modeling and/or replacing human annotators in RLHF (where the goal is to select the preferred output among a model's responses).

Data Annotation: LLJs can be used to annotate datasets for model training and evaluation.

LLJs became popular for these tasks because they are considered a proxy for human judgement that can be used cheaply, quickly, and at scale; however, the limitations of this approach are often overlooked. Two key factors to consider are:

Validity: When assessing whether LLJs actually agree with human judgement, it is important to consider whether humans themselves agree on the task. On highly ambiguous tasks, neither the right way to measure agreement nor an appropriate threshold may be clear. For example, the GPT-5 system card reports 75% agreement between the LLJ and human assessors, a level that suggests relying on the LLJ alone may not be sufficient.

Reliability: LLJs exhibit a variety of biases, ranging from preferring their own outputs (when used to compare multiple candidate models) to skewed racial and gender preferences in reward modeling. Furthermore, while some modern models can output an “explanation” for their label, this text may not faithfully reflect the underlying process. With these factors in mind, a “professionalism” rating of a text (as in the example above) may reflect a number of subjective factors beyond the user-specified criteria.
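To make the validity point concrete, here is a minimal Python sketch (standard library only) that compares raw percent agreement with Cohen's kappa, a common chance-corrected agreement statistic. Kappa is our choice for illustration; we are not claiming the paper or the GPT-5 system card uses it, and the labels below are made up. The same functions can be run on two human annotators' labels first, to see how much humans agree before holding the LLJ to that bar.

```python
from collections import Counter


def percent_agreement(a, b):
    """Fraction of items on which two raters assign the same label."""
    if len(a) != len(b) or not a:
        raise ValueError("label lists must be non-empty and the same length")
    return sum(x == y for x, y in zip(a, b)) / len(a)


def cohens_kappa(a, b):
    """Cohen's kappa: observed agreement corrected for agreement expected by chance."""
    n = len(a)
    p_observed = percent_agreement(a, b)  # also validates the inputs
    freq_a, freq_b = Counter(a), Counter(b)
    # Chance agreement: probability that both raters pick the same label
    # independently, using each rater's own label frequencies.
    p_chance = sum((freq_a[lab] / n) * (freq_b[lab] / n) for lab in set(a) | set(b))
    if p_chance == 1.0:
        # Both raters used one identical label for every item; kappa is
        # undefined here, so we return 1.0 by convention (agreement is perfect).
        return 1.0
    return (p_observed - p_chance) / (1 - p_chance)


# Hypothetical labels for 10 outputs: mostly "safe", as is common in safety evals.
human = ["safe"] * 9 + ["unsafe"]
# The hypothetical judge flags one safe item as unsafe and misses the real unsafe one.
judge = ["safe"] * 8 + ["unsafe", "safe"]

print(percent_agreement(human, judge))       # 0.8
print(round(cohens_kappa(human, judge), 3))  # -0.111
```

In this toy case, 80% raw agreement corresponds to a kappa slightly below zero, i.e., no better than chance, because nearly every item is "safe". A raw agreement figure is hard to interpret without knowing the label distribution and how well humans agree with each other on the same items.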

Chehbouni’s paper outlines a deeper list of interconnected challenges with the use of LLJs. Many of these challenges parallel those of human evaluation on difficult tasks. Mitigations can include using LLM-as-a-Jury (an ensemble of diverse LLJs) and carefully reviewing LLJ outputs for biases and consistency before deploying them at scale.
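A minimal sketch of the LLM-as-a-Jury idea, assuming each judge model's label for an item has already been collected (the calls to the models are not shown, and the judges and labels here are hypothetical). It takes a simple majority vote and routes low-consensus items to a human reviewer instead of forcing a verdict. Majority voting is just one possible aggregation rule, and it only helps if the judges are genuinely diverse: judges that share a bias will tend to agree on the same wrong answer.

```python
from collections import Counter


def jury_verdict(votes, min_share=0.5):
    """Majority vote over one item's labels from several judge models.

    Returns (label, share). label is None when the top label does not win
    strictly more than min_share of the votes, flagging the item for human review.
    """
    if not votes:
        raise ValueError("need at least one vote")
    # With the default min_share=0.5, a tie between the top labels can never
    # clear the threshold, so ties are always sent to human review.
    label, count = Counter(votes).most_common(1)[0]
    share = count / len(votes)
    return (label if share > min_share else None), share


# Hypothetical "professionalism" labels from three different judge models.
votes_per_item = {
    "output_1": ["professional", "professional", "professional"],
    "output_2": ["professional", "unprofessional", "professional"],
    "output_3": ["professional", "unprofessional", "unsure"],
}
for item, votes in votes_per_item.items():
    label, share = jury_verdict(votes)
    print(item, label, round(share, 2))
# output_1 professional 1.0
# output_2 professional 0.67
# output_3 None 0.33
```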

Key Takeaway: In an ecosystem where the pressure to deploy AI systems quickly is high, LLJs can look like a quick win for evaluation; however, concerns around validity and reliability mean that LLJs themselves should be carefully evaluated for appropriateness to a given task. When using LLJs, practitioners should examine the task itself for subjectivity (for example, by measuring inter-annotator agreement among humans on a sample), evaluate the model outputs against a large gold-standard dataset, analyze the errors for systematic biases, and, when possible, use an ensemble of LLJs (i.e., LLM-as-a-Jury).
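The "analyze the errors for systematic biases" step in the takeaway can start as simply as comparing the judge's error rate against gold labels across groups of items. The sketch below is hypothetical: the grouping attribute (whether the output came from the judge's own model, to probe self-preference), the group names, and the records are all made up for illustration. A gap between groups is a signal to investigate, not proof of bias, especially on small samples.

```python
from collections import defaultdict


def error_rate_by_group(records):
    """Judge error rate per group, from (group, judge_label, gold_label) tuples."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, judge_label, gold_label in records:
        totals[group] += 1
        errors[group] += judge_label != gold_label  # True counts as 1
    return {group: errors[group] / totals[group] for group in totals}


# Hypothetical gold-standard sample, grouped by which model produced each output.
records = [
    ("judge_own_model", "good", "good"),
    ("judge_own_model", "good", "bad"),
    ("judge_own_model", "good", "bad"),
    ("judge_own_model", "good", "good"),
    ("other_model", "bad", "bad"),
    ("other_model", "good", "good"),
    ("other_model", "bad", "good"),
    ("other_model", "good", "good"),
]
for group, rate in error_rate_by_group(records).items():
    print(group, rate)
# judge_own_model 0.5
# other_model 0.25
```

In practice one would also look at the direction of the errors (here, both mistakes on the judge's own outputs are over-ratings) and use far more than a handful of items per group.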

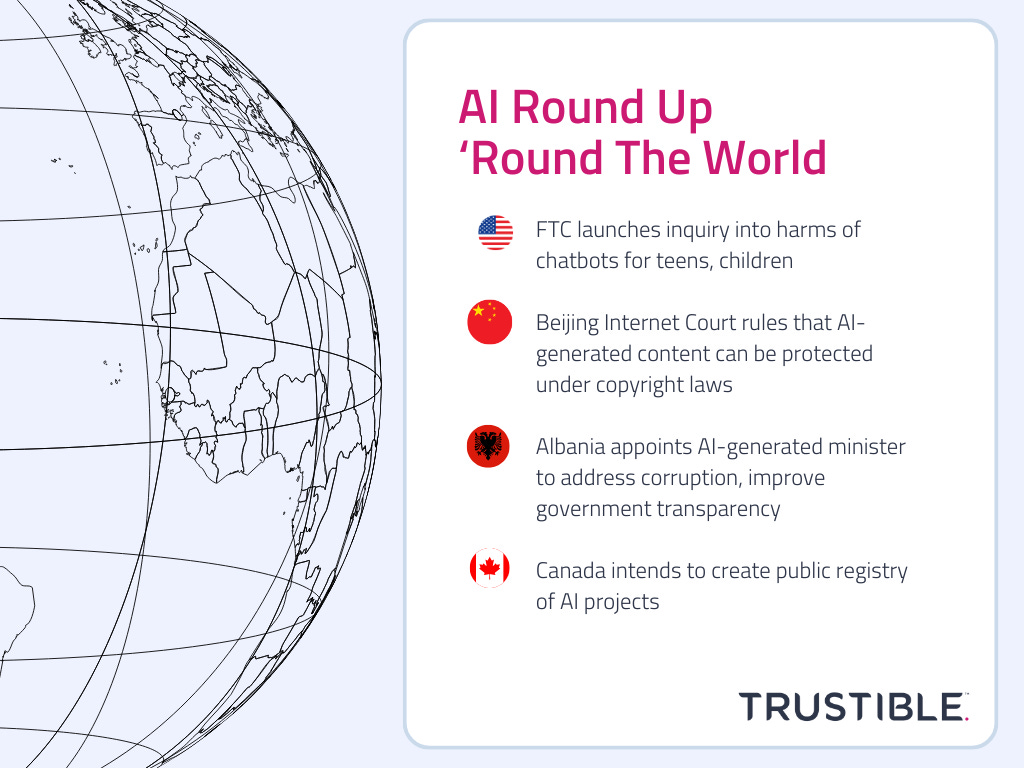

3. Global & U.S. Policy Roundup

U.S. Federal Government. AI-related policy developments across the federal government include:

White House. Office of Science and Technology Policy (OSTP) director Michael Kratsios teased the White House’s AI policy plans. OSTP will solicit input from the public on “federal regulations that they think hold back the development and deployment of AI.” President Trump also hosted CEOs from all the major tech companies to discuss AI innovation.

Federal Agencies. The FTC launched an inquiry into how certain companies deploy their chatbots, with a particular focus on harms posed to teens and children. The inquiry is not a formal investigation and seeks to obtain information from seven AI companies. The Government Accountability Office released a report outlining 94 AI-related requirements for the federal government.

Congress. Senator Ted Cruz (R-TX) is back in the news, declaring that the AI moratorium on state and local laws is “not dead at all.” It is unclear what Cruz meant by his comments, as the Senate removed the moratorium language from the Republicans’ reconciliation bill earlier this summer. Cruz also introduced the SANDBOX Act, which would permit AI developers and deployers to seek waivers from regulations that could “impede their work.” OSTP would coordinate with federal agencies to evaluate requests within the scope.

U.S. States. AI-related policy developments at the state level include:

California. The state legislature passed SB 53 with a veto-proof majority. The bill is a reincarnation of last year’s SB 1047 with a far narrower scope that requires foundation model providers to implement and disclose safety and security protocols for their models.

Michigan. The Michigan Chamber of Commerce came out against a proposed bill in the state legislature that would comprehensively regulate AI in the same vein as Colorado’s AI law. The Chamber called the bill “well-intentioned” but favored a federal AI law.

Ohio. The state’s Department of Homeland Security launched a new reporting system that uses AI to disseminate information about potential threats of violence. Users can upload photos, videos, and audio of alleged suspicious activity, which is reported to and reviewed by analysts at the Statewide Terrorism Analysis and Crime Center.

Texas. Secretary of Education Linda McMahon visited a private school in Austin, TX that is relying on AI to help teach its students. The Secretary also participated in a roundtable discussion about “AI literacy and the evolving role of technology in education.”

Africa. The South African government hosted a forum to discuss creating a “National Artificial Intelligence Network of Experts.” Uganda launched the Aeonian Project, which intends to build Africa’s first “AI factory.”

Asia. AI-related policy developments in Asia include:

China. The Beijing Internet Court released decisions in eight AI-related cases. Notably, the court found that AI-generated content can be protected under the country’s copyright laws and that platforms using algorithms to detect and remove AI-generated content must provide “reasonable explanations” for their decisions. While these cases are significant, they are non-binding because China follows a civil law system.

Kazakhstan. The President of Kazakhstan announced plans for a new Ministry of Artificial Intelligence and Digital Development to address some of the emerging threats posed by AI technologies.

South Korea. The Ministry of Science and Information and Communication Technology released a draft decree to implement South Korea’s AI Basic Act. The draft decree requires certain disclosures from developers of generative and high-impact AI and establishes safety assurance standards for high-performance AI.

Europe. AI-related policy developments in Europe include:

Albania. The Prime Minister appointed an AI-generated minister to address corruption and improve government transparency. The minister, known as Diella, was created with help from Microsoft.

EU. The European Commission announced that the forthcoming “digital omnibus” will cover “targeted adjustments” to the EU AI Act. At the same time, the “stop the clock” movement got a notable boost from former Italian Prime Minister Mario Draghi, who argued that the EU AI Act should be paused to assess potential “drawbacks.” Poland is also proposing a 6 to 12 month delay for high-risk AI system penalties under the EU AI Act. Separately, Mistral announced that it raised €1.7 billion in its most recent funding round and secured a strategic partnership with Dutch semiconductor company ASML.

UK. OpenAI and Nvidia plan to announce a large investment in AI infrastructure as part of President Trump’s state visit towards the end of September. The exact dollar amount has not been disclosed, but the deal includes commitments from the UK government to supply energy, whereas OpenAI will provide “access to its AI tools and technology” and Nvidia will supply “the chips used to power AI models.”

Middle East. The UAE sent local and federal chief AI officers to meet with representatives from the US tech industry in Silicon Valley. The Institute of Foundation Models at Mohamed bin Zayed University of Artificial Intelligence and G42 also launched K2, described as a “leading open-source system for advanced AI reasoning.”

North America. AI-related policy developments in North America outside the U.S. include:

Canada. The Canadian federal government intends to create a public registry for its AI projects. OpenAI is also seeking to move its copyright case out of Canada and to the US, arguing that it does not do business in Canada and is not subject to Canadian copyright law.

Mexico. The Chamber of Deputies and Senate are working on a comprehensive AI law that builds on existing law and adds new requirements to address risks from AI technology. Mexico’s work on a national AI law comes at a time when other countries are abandoning or delaying their efforts on similar legislation.

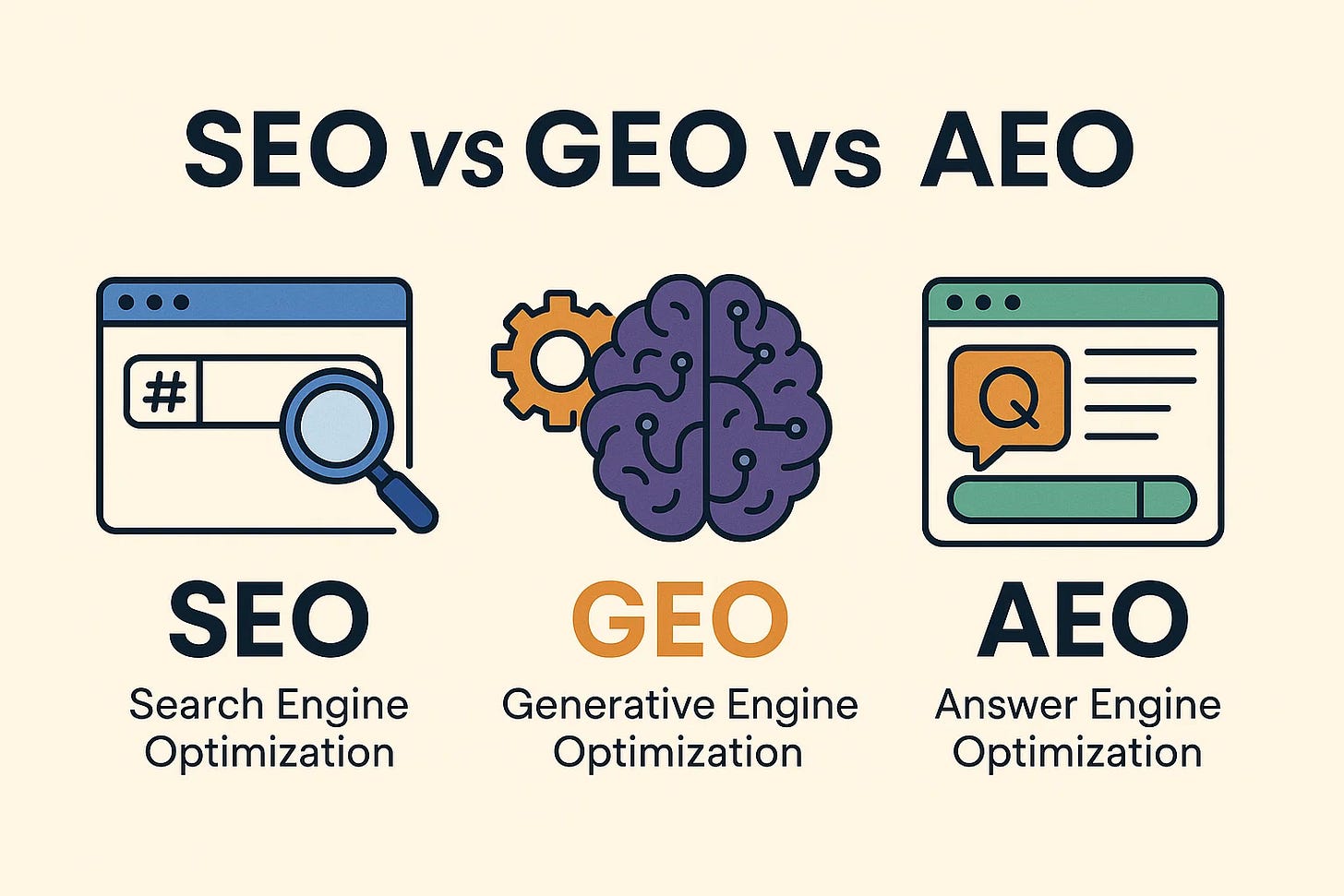

4. AEO / GEO - The New SEO

In the pre-AI internet era, most businesses lived or died at the hands of algorithms owned by search giants that determined how webpages showed up in internet searches. An entire field of tools and services, called Search Engine Optimization (SEO), developed to reverse engineer the search algorithms and improve a website’s ability to rank high in search results. As we enter the AI era, a brand-new category is emerging around optimizing content for AI answer engines.

There are two variants of AI optimization, which are often conflated with each other: Answer Engine Optimization (AEO) and Generative Engine Optimization (GEO). AEO is geared towards appearing in the ‘answer box’ that now shows up above the results on many traditional search platforms; these boxes aim to provide a single answer for any given query. GEO, in contrast, focuses entirely on the experience inside AI chat platforms. Most major consumer AI platforms, like ChatGPT, can pull in search results or draw on existing indexed content and use them to generate answers. Pulling data from the internet can reduce hallucinations and (probably) also mitigate some legal liability in the US, since search platforms that show user-generated content have traditionally been protected under Section 230.

While there is no shortage of potential market impacts from this, there are two major points of importance for AI governance professionals. The first is that the way content is written and optimized for the web will have to change. Whereas SEO focused heavily on backlinks to build page authority, optimizing for AI systems may hinge on different kinds of direct information. Dozens of startups have emerged to help organizations with this task, and, unsurprisingly, many groups are also turning to AI for help. How a brand is presented in an AI tool could become an area of concern for AI governance teams, who may have the expertise to support the business as it navigates AEO/GEO issues. The second is that answers from an AI chat system can be influenced in the same ways as search results. Google has already discussed potential ‘sponsored answer results,’ and these will likely arrive over time. In the meantime, any ‘answer’ from an AI system that conducts internet searches should be viewed with the same level of scrutiny as results from a regular search engine.

Conversely, GenAI content is one reason many brands are seeing their content visibility decline sharply (in both traditional SEO and GEO). Search engines and LLMs are prioritizing organic, human-written content in their rankings because of its unique and novel quality, while content produced by LLMs is ranked as lower quality. We’ve discussed this before in our coverage of AI slop. This presents a balancing act: AI can help accelerate content development and visibility in the era of AEO/GEO, but overreliance can actually do more harm than good.

Key Takeaway: AI tools may quickly become the ‘gatekeepers’ of information. What they say about companies, brands, or services could quickly be accepted as canonical. Many AI tools give only a few answers to questions like ‘What’s the best newsletter platform?’, so the competition to appear in those answers will be fierce and could quickly reshape entire markets.

—

As always, we welcome your feedback on content! Have suggestions? Drop us a line at newsletter@trustible.ai.

AI Responsibly,

- Trustible Team