In deepfakes we trust?

Plus, guidelines for GenAI risk management, EU AI Act updates, and AI governance in insurance.

Hello! In today’s edition (5-minute read):

Taylor Swift and President Biden deepfakes expose vulnerabilities in detection

A guide to categorize & mitigate Generative AI risks

Updates on the EU AI Act: it’s almost finalized

Operationalizing AI governance in the insurance sector

Trustible selected to contribute to NIST AI Safety Institute Consortium

If you missed our LinkedIn Live on how the FTC is shaking up AI, here’s the recording. 3 big takeaways:

The FTC is attempting to stake its claim on regulating AI through its recent activity.

Expect to see the FTC use enforcement actions as a way to rein in egregious AI use cases.

Companies need to start thinking about AI governance structures now to avoid regulatory scrutiny.

1. AI deepfakes will be a major issue in 2024

Over the past two weeks, social media platforms (X, formerly Twitter, in particular) were flooded with sexually explicit deepfake images and videos of Taylor Swift. At the same time, primary voters in New Hampshire were receiving deepfake voice calls imitating President Biden. AI-generated fake images have quickly become easy and cheap to produce, and their quality is now high enough to deceive many untrained viewers. These incidents underscore a key fear: that deepfakes and other uses of AI will have damaging effects on this year’s many global elections.

Few countries have clear legal protections against copying someone else’s likeness, and takedowns are often based on a breach of a social media platform’s terms of service rather than on any existing law. This may be changing as regulators, including the FCC and FEC, race to introduce new rules, but their ability to enforce those rules is in question. Detecting deepfakes will likely only get more difficult over time, but here’s a quick view of the state of generated content and our ability to detect it:

Images

What: Generating an image that looks like someone else

Detection Ability: Moderate - Some generation techniques leave clear signs such as garbled text, extra body parts, or fuzzy sections, although these can be cleaned up easily by photo editing professionals.

Video

What: Generating a video that portrays someone else, sometimes ‘grafted’ onto a real video

Detection Ability: Decent - Many low-cost, current-generation techniques leave clear signs of AI use, and many generated videos fall victim to the ‘uncanny valley’.

Voices

What: Mimicking another person’s voice

Detection Ability: Low - Often the clearest signs of a fake come from the word choice used, not the actual sound of someone’s voice, making detection here particularly difficult.

Key Takeaway: Legal protections against deepfakes are extremely weak. Our ability to detect them may be lagging behind the technology used to create them, and it’s unclear how their prevalence on social media may affect elections in 2024.

2. Generative AI Risks & Considerations

Speaking of AI risks…

Organizations have used traditional statistical and machine learning models for many years, and model risk management teams have been effective at identifying, managing, and mitigating their risks to ensure the models are compliant and perform as intended. In the age of Generative AI, however, most models are licensed, meaning the risks may be transferred from the model provider to the organization deploying the model. There is also little historical research or established best practice on Generative AI risk management and mitigation.

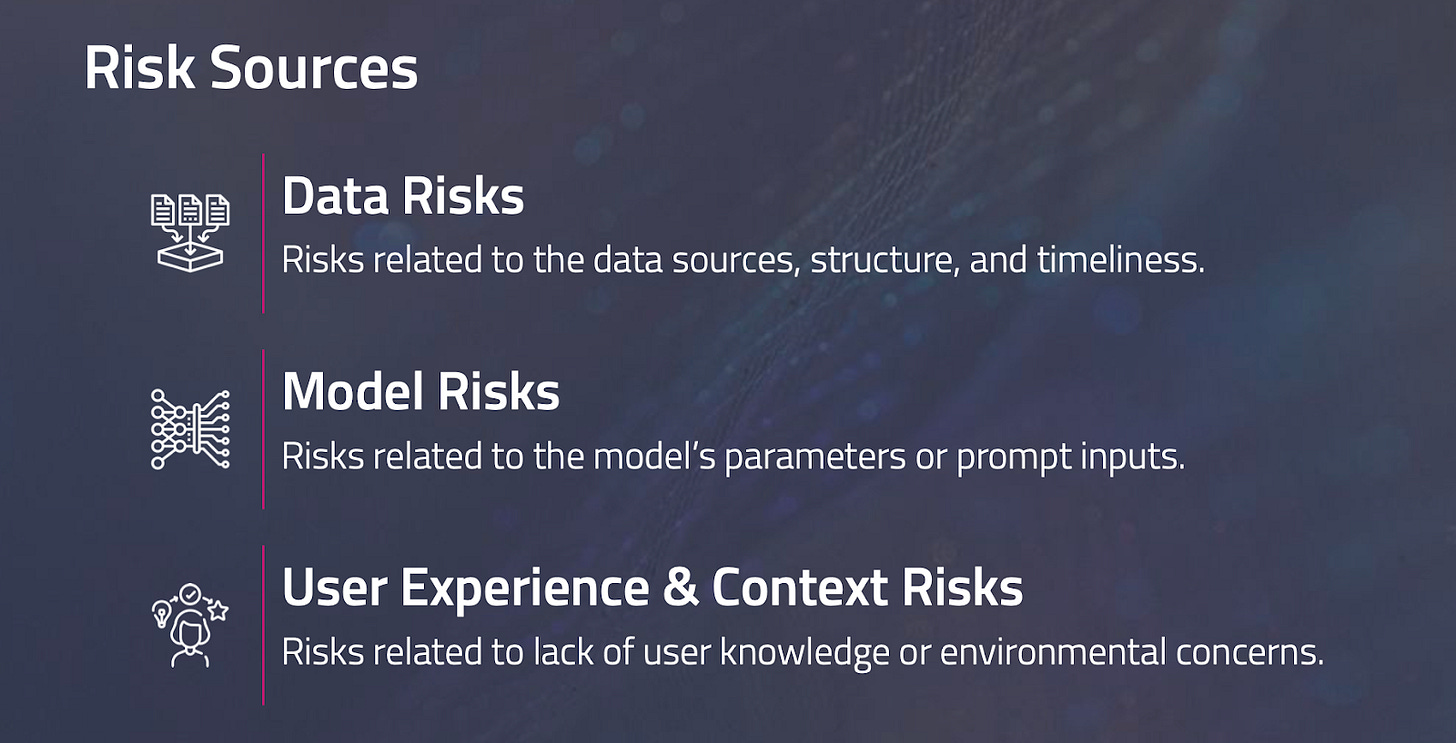

Trustible’s research has identified three primary ‘sources’ of generative AI risk: the training data used for the model, the composition and outputs of the model itself, and the context and user experience in which the model is deployed. Across these three risk sources, we outline 19 of the most prominent risk types, along with potential guardrails or mitigation efforts an organization can use to build trust in its AI system.

3. EU AI Act nears completion with unanimous vote

On Feb. 2, ambassadors from the EU’s 27 member states unanimously approved the final version of the EU AI Act. The decision followed weeks of detailed discussions to finalize the law, based on an initial agreement reached in Dec. 2023. Some countries, especially France, Germany, and Italy, had concerns and tried to make changes, but all eventually supported the final text, with France’s support coming with “strict reservations.” The next step is a vote in the EU Parliament, planned for early April. If passed, the AI Act will officially become law 20 days after it’s published in the EU’s Official Journal.

Our Take: As expected, the last-minute efforts to alter the legislative text failed. Last week’s vote sets the stage for an expected entry into force by early summer 2024, which means certain provisions (i.e., those on prohibited AI systems) will likely take effect between Q4 2024 and Q1 2025. With over 85 Articles and 13 Annexes, the EU AI Act is a massive compliance undertaking that businesses need to begin planning for now.

4. How do you actually operationalize AI Governance?

Federal regulators have largely focused on issuing guidance and initiating inquiries into AI, whereas state regulators have taken a more proactive stance, addressing AI's unique challenges within sectors such as insurance.

The NY Department of Financial Services released a draft guidance letter proposing standards for identifying, measuring, and mitigating potential bias from the use of ‘External Consumer Data and Information Sources’ in underwriting and pricing. The proposal mirrors a Colorado regulation that was finalized last year and is already in effect. The National Association of Insurance Commissioners also released its model bulletin on AI risk management last year, and we expect more states to announce similar proposed regulations. Because these rules come from regulators rather than legislatures, they likely won’t face the same level of uncertainty that stems from the legislative process.

This means that operationalizing AI governance in the insurance sector is a must-do.

Join us on Wednesday, February 28 at 12:00 P.M. EST / 9:00 A.M. PST for a LinkedIn Live. Register here.

Speakers:

Andrew Gamino-Cheong – Co-Founder & CTO, Trustible

Tamra Moore – VP & Corporate Counsel, Data, Privacy, & AI, Prudential

Shontael Starry – AI Ethicist; Data Scientist, Nationwide

Ellie Jurado-Nieves – VP & Asst. General Counsel, Strategic Public Policy Initiatives, Guardian Life

Agenda:

Current state of laws and regulations for AI in insurance

Challenges in complying with Colorado Reg 10-1-1

How bias and fairness may differ between different types of insurance

Best practices for how technical and non-technical teams can collaborate better on AI governance

5. Trustible Selected to Contribute to NIST AI Safety Institute Consortium

Trustible was selected to collaborate with the National Institute of Standards and Technology’s (NIST) new Artificial Intelligence Safety Institute Consortium to establish a new measurement science that will enable the identification of proven, scalable, and interoperable measurements and methodologies to promote the development of trustworthy Artificial Intelligence (AI) and its responsible use. NIST does not evaluate commercial products under this Consortium and does not endorse any product or service used. Additional information on this Consortium can be found here.

We’re looking forward to sharing our experiences helping organizations adopt and operationalize the NIST AI RMF, our research on AI incidents and related harms, and best practices for AI transparency.

***

That’s it for today! We welcome your feedback on this edition and what you’d like to see going forward.

- The Trustible Team