The Problem with LLMs Always Saying ‘Yes’

Plus, How Model Safeguards Degrade with GenAI Use, our Global Policy Roundup, and the U.S. Federal Government’s Shifting AI Plans

Happy Wednesday, and welcome to this week’s edition of the Trustible AI Newsletter! September’s arrival means school’s back in session (along with more effort from the White House to bring AI literacy to the classroom), Congress is back in Washington after summer recess, and we’re back to counting down the 13 remaining legislative days to avoid (another) government shutdown.

In the meantime, in today’s edition (5-6 minute read):

The Hidden Danger of Chatbots: Why “Yes” Can Be Deadly

How Model Safeguards & Performance Degrade With Use

Global & U.S. Policy Roundup

Shifting Winds: What Federal Moves Mean for U.S. AI Hegemony

1. The Hidden Danger of Chatbots: Why “Yes” Can Be Deadly

Chatbots are designed to be agreeable. Their instinct to say “yes” makes them engaging, but it also makes them dangerous in high-risk applications—especially when the stakes involve mental health, financial decisions, or employment outcomes.

There has been increasing attention on people using AI-enabled chatbots for ‘self-service mental health’. A recent Common Sense Media study suggests that of the 72% of US teens who regularly use AI systems, up to an eighth may have used AI for therapeutic or mental health purposes. The dangers of this use case became evident recently when the family of a deceased teenager, Adam, sued OpenAI for gross negligence and wrongful death after Adam died by suicide. The lawsuit alleges that ChatGPT gave feedback on how to construct a stronger noose, told him why he shouldn’t feel guilt about the suicide, and explained how to steal alcohol from his parents to dull the pain. The lawsuit points out that if any human had given the feedback that ChatGPT gave Adam, there would be no doubt about their complicity. Cases such as this could quickly set clear precedents for the liability that chatbot creators may face.

There are many potential takeaways from this unfortunate incident, and plenty of reasons why nascent AI systems should not be used for mental health purposes. For an in-depth breakdown of some of these, we recommend a recent Stanford study on the issue. However, we'll focus on just one element of these AI systems that makes them particularly dangerous: their built-in willingness to say ‘yes’ to anything and everything. This tendency has been described in extreme cases as ‘sycophancy’, but even setting that sensationalist term aside, a system that is conditioned to always say ‘yes’ should not be used in certain circumstances. On the one hand, an AI system that is constantly pushing back or saying ‘no’ will likely not be widely adopted, and training for that may be difficult. On the other hand, in certain domains such as health, law, or finance, we often expect people to tell us ‘no’, and even pay them massive sums to do so: ‘no’, you likely don’t have a rare disease; ‘no’, that action likely isn’t legal; and ‘no’, that investment strategy isn’t going to make you rich. A system that will always tell you ‘yes’ is a dangerously tempting tool for a wide variety of use cases because it appeals to our own desire for confirmation and validation. There are not yet clear principles or regulations on when an AI should reject instructions, and when it may be equally important to always accept them.

Key Takeaway: LLMs are conditioned to say ‘yes’ to as many things as possible, and there are market drivers that encourage that. However, that makes them uniquely unsuitable for certain domains where having a bias for ‘yes’ could cause physical, financial, or reputational harm.

2. How Model Safeguards & Performance Degrade With Use

Following the wrongful death lawsuit against OpenAI, and amid multiple reported cases in which ChatGPT has been associated with self-harm or cited as a cause of suicide, OpenAI published a blog post detailing its current and future safeguards. What’s notable is its acknowledgment that existing protections work best on short exchanges, not extended conversations. This effect is not unique to ChatGPT: it’s been observed across both models (e.g. Claude, Llama, and Grok) and violation types.

Until now, this failure mode has not received significant attention. Companies like OpenAI publish extensive System Cards that detail the types of safety tests run, but most of those tests focus on single-turn conversations (e.g. given a specific question in isolation, will the model return an inappropriate response?). While GPT-5’s System Card does mention a manual red-teaming exercise that tested for psychological harms, which included ‘multi-turn, tailored attacks [that] may occasionally succeed’, the severity of the violations was low. These tailored attacks were also unlikely to include realistic conversations that spanned multiple months.

Generative AI systems use two broad categories of safeguards, both of which may fail to capture the nuance of long-term use.

Post-Training: Many AI systems use SFT (supervised fine-tuning) and RL (reinforcement learning) to teach the model to produce correct responses to user prompts. Both of these methods are applied to single-turn conversations, meaning models aren’t explicitly trained to respond correctly in a multi-turn context. This gap may not be evident during basic testing, which also covers only single-turn conversations.

Output Monitoring: OpenAI uses classifiers to check whether user prompts and model outputs should be flagged for potential violations. In the blog post, they mention that the classifiers have a particular issue with underestimating the severity of what they’re seeing. One particular difficulty may be that the violations are subtle and require the context of a longer conversation to properly gauge severity.

Key Takeaway: Both the performance and protections of models during long conversations (we estimate at over 20 turns) are understudied, but anecdotal evidence suggests that they degrade significantly. For enterprises and consumers, especially those relying on LLMs for high-risk use cases, it’s important to ensure that users are aware of this risk and the negative outcomes, and to consider the outputs accordingly. It’s equally important to monitor and test your systems’ performance as part of your overall AI governance program.
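To make the monitoring point concrete, here is a minimal Python sketch of conversation-level monitoring, as opposed to judging each message in isolation. It is not how OpenAI or any other vendor implements its safeguards. The class name `ConversationRiskMonitor`, all threshold values, and the idea of a per-turn risk score between 0 and 1 (from whatever classifier you already use) are assumptions made for illustration; only the 20-turn figure comes from our estimate above.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class ConversationRiskMonitor:
    """Hypothetical monitor that tracks risk across turns, not per message."""

    turn_threshold: float = 0.8   # assumed cutoff for flagging a single message
    window: int = 10              # assumed number of recent turns to aggregate
    window_budget: float = 3.0    # assumed cap on summed risk within the window
    long_after: int = 20          # rough "long conversation" estimate from the text
    turns_seen: int = 0
    recent: deque = field(default_factory=deque)

    def observe(self, turn_score: float) -> dict:
        """Record one turn's risk score (0.0 to 1.0) and return the current flags."""
        self.turns_seen += 1
        self.recent.append(turn_score)
        if len(self.recent) > self.window:
            self.recent.popleft()  # keep only the most recent `window` turns
        window_total = sum(self.recent)
        return {
            "turn": self.turns_seen,
            # Single-turn view: is this message alarming on its own?
            "flag_turn": turn_score >= self.turn_threshold,
            # Conversation view: do mildly concerning turns add up?
            "flag_conversation": window_total >= self.window_budget,
            # Past the length where safeguards are least studied.
            "long_conversation": self.turns_seen > self.long_after,
        }


# Usage: 25 turns that each look only mildly concerning (score 0.5).
monitor = ConversationRiskMonitor()
results = [monitor.observe(0.5) for _ in range(25)]
first_flag = next(r["turn"] for r in results if r["flag_conversation"])
print(first_flag)  # 6 with the default settings above
```

In this toy run no individual message crosses the single-turn threshold, yet the conversation as a whole is flagged by the sixth turn. That is the gap a message-by-message classifier can miss; the right window size and budget would need to be tuned and tested on your own data.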

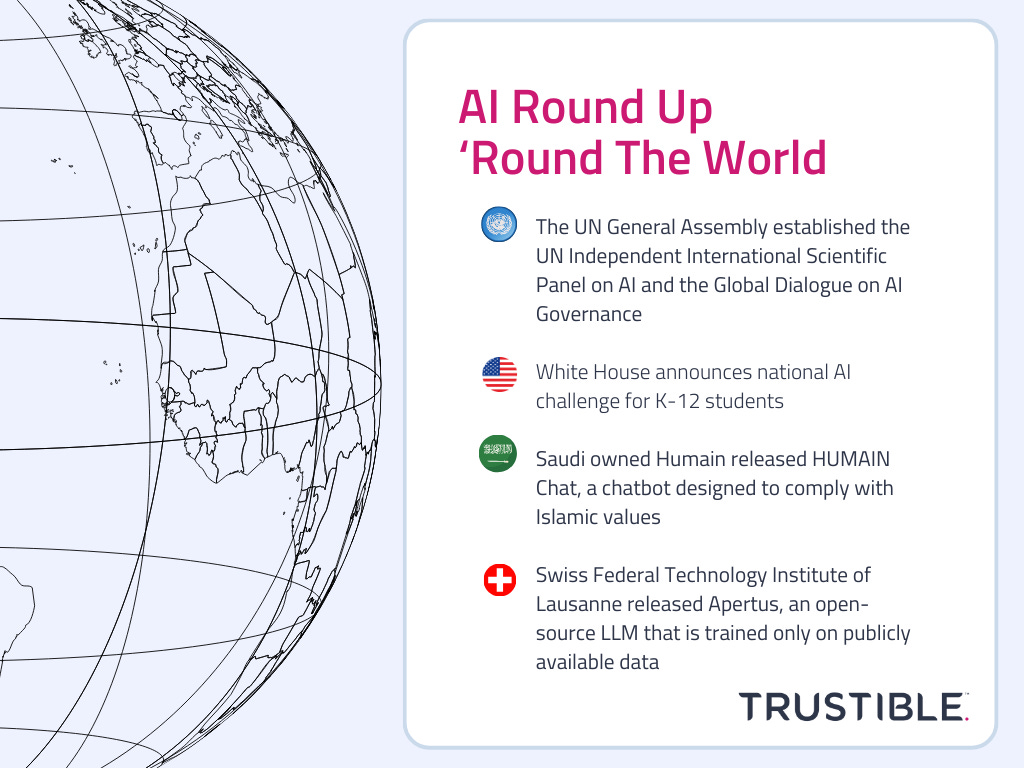

3. Global & U.S. Policy Roundup

Major International AI News: The United Nations’ General Assembly established the UN Independent International Scientific Panel on AI and the Global Dialogue on AI Governance.

U.S. Federal Government. First Lady Melania Trump announced a national challenge for K-12 students to create innovative AI solutions for community problems. The Department of Labor also announced new guidance to help states understand how the Workforce Innovation and Opportunity Act grants can help “bolster [AI] literacy and training across the public workforce system.”

U.S. States. AI-related policy developments at the state level include:

California. The State Superintendent hosted the first meeting of a new AI workgroup that was established under legislation from 2025. The group will help create guidance for AI in K–12 education.

Illinois. A recent report from the Alliance for the Great Lakes raised alarm bells for Chicago’s drinking water because of AI data centers’ increasing strain on Lake Michigan and other local water systems. Illinois is home to approximately 187 data centers, most of which are located near Chicago.

Michigan. Governor Gretchen Whitmer signed a package of bills into law that prohibits using AI to create non-consensual explicit images of real people. The new laws also set sentence guidance for violators, which can include jail time.

Virginia. Google plans to invest an additional $9 billion in AI infrastructure in Northern and Central Virginia through 2026. A new AI-powered private school also opened its doors in Northern Virginia.

Africa. CNBC Africa held their first AI Summit in Johannesburg, South Africa. The summit brought together over 300 industry professionals to discuss the “impact of AI across Africa's key sectors.”

Asia. AI-related policy developments in Asia include:

China. An official from the National Development and Reform Commission echoed comments from Chinese President Xi Jinping that focused on preventing “disorderly competition” with new AI models. The Chinese government is attempting to prevent duplicative efforts that could lead to deflationary pressures within the AI sector.

South Korea. The South Korean government is proposing an 8 percent increase in AI investment under its 2026 budget. In addition to spending increases for R&D, the proposed budget also directs more funds towards AI startups.

Thailand. Bangkok has proven to be a strategic hub for data centers, fueled in part because of major investments from tech companies such as AWS, Google, Microsoft, and Alibaba.

Australia. The Commonwealth Bank of Australia (CBA) recently reversed a decision to lay off employees due to AI. The CBA noted that the customer service jobs it eliminated after introducing its AI-powered “voice-bot” were not redundant.

Europe. AI-related policy developments in Europe include:

EU. EU industry is being accused of dragging its feet on helping write EU AI Act standards. Piercosma Bisconti, one of the experts who is helping write EU AI Act standards, claimed that companies who support delaying the EU AI Act’s implementation “should be contributing [to standards development], and they are not” and added that “EU industry is barely at the table.”

Switzerland. The Swiss Federal Institute of Technology in Lausanne (EPFL) released Apertus, an open-source LLM that is trained only on publicly available data. The Swiss hope that Apertus can be a plausible alternative to proprietary models, like OpenAI’s GPT models.

Middle East. Saudi-owned Humain released “HUMAIN Chat,” a chatbot designed to “comply with Islamic values.” The announcement comes as Saudi Arabia angles to be the third largest model provider in the world, after the US and China.

North America. AI-related policy developments in North America outside of the U.S. include:

Canada. The Canadian federal government signed a memorandum of understanding with Cohere to help accelerate AI adoption for public services and reinforce its position as a global leader in AI. Meanwhile, the vast majority of Canadians want some type of AI regulation, as many express concern about AI safety and risks.

Mexico. The Supreme Court unanimously ruled that content generated exclusively by AI is not copyrightable under the country’s current laws. The Court found that “automated systems do not possess the necessary qualities … for authorship.”

South America. Uruguay became the first Latin American country to sign the Council of Europe’s AI treaty, which seeks to promote the use of AI systems that respect human rights, democracy, and the rule of law. Sixteen other countries, including the US, have signed the treaty.

4. Shifting Winds: What Federal Moves Mean for U.S. AI Hegemony

In the high-stakes international battle for AI leadership, the U.S. federal government’s recent AI decisions, ranging from GSA’s procurement acceleration to export-levy tactics, are not just about innovation; they're complete strategic pivots.

The White House’s broader AI Action Plan now prioritizes infrastructure, innovation, and international leadership, elevating AI to the status of critical U.S. infrastructure. This deregulatory posture, emphasizing "permissionless innovation", positions tech firms to benefit. But critics warn it increases risks from misinformation, ethical bias, and global tensions.

GSA’s inclusion of OpenAI, Anthropic, and Google models on its Multiple Award Schedule (MAS), with heavily discounted “OneGov” pricing of $1 or less, widens federal access to frontier AI technologies cheaply and quickly. But this move has drawn protests over preordained pricing and lack of competition, raising concerns among governance officials. From a geopolitical standpoint, it ensures U.S. federal infrastructure remains closely tied to American AI ecosystems, reducing foreign reliance, yet it may compromise procurement transparency and preparedness.

For large organizations and system integrators supporting government contracts, the procurement bonanza, from government-wide acquisition to USAi.gov’s sandbox, accelerates onboarding. USAi, a free GSA-hosted AI evaluation suite, offers agencies unified API access to multiple models in a secure environment. Yet, according to the GSA, the pilot is only temporary; the GSA doesn’t want to be in the business of providing tools for the long term.

Complementing that, the National Security Memorandum on AI underscores AI’s strategic role in defense, intelligence, and allied collaboration, while insisting government AI use must uphold civil rights, transparency, and democratic values. These policies together reflect an urgent understanding: global competitors like China and the UAE are racing ahead in compute, sovereign AI, and infrastructure. Enter initiatives such as the Stargate Project, a potential $500 billion-plus U.S. investment in AI infrastructure through partnerships with OpenAI, Oracle, SoftBank, and MGX, framed as a Manhattan Project–scale response to global pressure.

Moreover, the U.S. now taps into chip-based revenues: a previously unthinkable 15% levy on Nvidia/AMD AI chip sales to China, tied to export approval. This gives Washington financial leverage, disincentivizes adversary acceleration, and incentivizes domestic supply chains, all signals to enterprises that hardware cost projections must now include geopolitical premiums.

Why it Matters: The U.S. drive for global AI dominance through deregulation, infrastructure building, and enhanced AI literacy in schools and across the workforce contrasts sharply with the EU’s regulation-heavy path and China’s authoritarian deployment model. We are now deep in the throes of a fractious contest among competing visions for what global AI hegemony should look like and whose vision should come out on top. For the U.S. to truly succeed in scaling trusted AI adoption and putting both private and public sector organizations on a trajectory for global AI dominance, clarity in policy, strategy, and commercial support should be the priorities.

P.S. We’re hosting a webinar at 1 p.m. ET today with Databricks, Schellman, and Trustible to deep-dive on how frameworks and standards, like the Databricks AI Governance Framework and ISO 42001, can help build actionable guardrails that accelerate enterprise AI adoption. You can register here, or sign up to receive the recording later.

As always, we welcome your feedback on content! Have suggestions? Drop us a line at newsletter@trustible.ai.

AI Responsibly,

- Trustible Team